Introduction

Norconex Web Crawler is a full-featured, open-source web crawling solution built to parse, extract, and index web content. The Crawler is flexible, adaptable, and user-friendly, making it a strong choice for extracting data from the web.

As the volume and complexity of web crawling tasks increase, organizations face challenges in scaling the Crawler efficiently to meet their needs. Scaling effectively means addressing configuration management, resource allocation, and the handling of large data sets while maintaining data quality and integrity.

In this blog post, you will learn how to handle configuration management for medium to large Crawler installations.

The Problem

Norconex Web Crawler only needs to be installed once, no matter how many sites you’re crawling. If you need to crawl different websites requiring different configuration options, you will likely need multiple configuration files. As your crawling needs grow, you will need still more configuration files, and parts of those files will inevitably be identical. How can you minimize the duplication between configs?

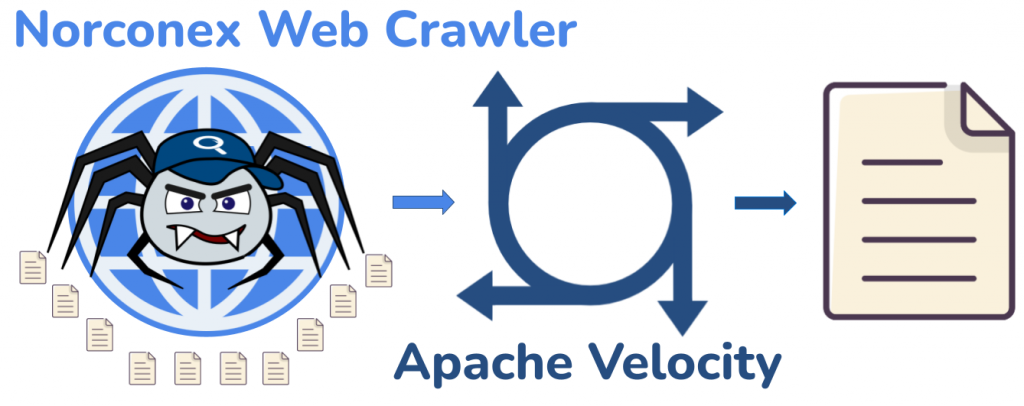

The Solution: Apache Velocity Templates

A Norconex Web Crawler configuration is not a plain XML file but rather an Apache Velocity template. Broadly speaking, the configuration file is interpreted by the Velocity Engine before being applied to the Crawler.

You can leverage the Velocity Engine to dynamically provide the appropriate values. The following sections walk you through exactly how to do so.

Variables

To keep things simple, consider a crawling solution that contains just 2 configuration files: one for siteA and one for siteB.

Note: This scenario is for demonstration purposes only. If you only have 2 sites to crawl, the following approach is not recommended.

Default configurations

The configurations for the 2 sites may look as follows.

siteA configuration

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE xml>

<httpcollector id="collector-siteA">

<workDir>./workDir</workDir>

<crawlers>

<crawler id="crawler-siteA">

<startURLs stayOnDomain="true">

<url>www.siteA.com</url>

</startURLs>

<maxDepth>-1</maxDepth>

<!-- redacted for brevity -->

</crawler>

</crawlers>

</httpcollector>

siteB configuration

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE xml>

<httpcollector id="collector-siteB">

<workDir>./workDir</workDir>

<crawlers>

<crawler id="crawler-siteB">

<startURLs stayOnDomain="true">

<url>www.siteB.com</url>

</startURLs>

<maxDepth>0</maxDepth>

<!-- redacted for brevity -->

</crawler>

</crawlers>

</httpcollector>

As you can probably see, just 4 differences exist between the two configurations:

- httpcollector id

- crawler id

- startURLs

- maxDepth

The common elements in both configurations should be shared. Below, you’ll learn how to share them with Velocity variables.

Optimized configuration

The following steps optimize the configuration by extracting the site-specific values to dedicated files, thereby removing duplication.

First, extract the unique items into their respective properties files.

siteA.properties

domain=www.siteA.com

maxDepth=-1

siteB.properties

domain=www.siteB.com

maxDepth=0

Then, add variables to the Crawler configuration and save it as my-config.xml at the root of your Crawler installation. The syntax to add a variable is ${variableName}.

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE xml>

<httpcollector id="collector-${domain}"> <!-- variable added here -->

<workDir>./workDir</workDir>

<crawlers>

<crawler id="crawler-${domain}"> <!-- variable added here -->

<startURLs stayOnDomain="true">

<url>${domain}</url> <!-- variable added here -->

</startURLs>

<maxDepth>${maxDepth}</maxDepth> <!-- variable added here -->

<!-- redacted for brevity -->

</crawler>

</crawlers>

</httpcollector>

With the variables in place in the Crawler config, the variables file simply needs to be specified to the Crawler start script. This is accomplished with the -variables flag, as follows.

siteA

>collector-http.bat start -clean -config=my-config.xml -variables=siteA.properties

siteB

>collector-http.bat start -clean -config=my-config.xml -variables=siteB.properties

The Crawler will replace the variables in the config XML with what it finds in the .properties file.

The example above is for a Windows machine. If you are on Linux, use the collector-http.sh script instead.
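
On Linux, starting the crawls one after another could be scripted roughly as follows. This is a minimal sketch, not part of the Crawler: it assumes you run it from the root of your Crawler installation, and run, crawl_sites, and the DRY_RUN switch are hypothetical names introduced for illustration. By default it only prints the commands it would run.

```shell
#!/bin/sh
# Sketch: start one crawl per site, reusing the shared my-config.xml.
# Assumption: this script sits at the root of the Crawler installation.

CONFIG="my-config.xml"
DRY_RUN="${DRY_RUN:-1}"   # hypothetical switch: 1 = print only, 0 = execute

# Echo the command in dry-run mode; otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Start one crawl per .properties file passed as an argument.
# A failed crawl does not stop the loop; the next site still runs.
crawl_sites() {
    for props in "$@"; do
        run ./collector-http.sh start -clean -config="$CONFIG" -variables="$props"
    done
}

crawl_sites siteA.properties siteB.properties
```

Run it with DRY_RUN=0 to actually start the crawls. Adding a new site then only requires a new .properties file in the list.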

Tip: If you’re interested in seeing what the config will look like after variables are replaced, use the configrender option.

>collector-http.bat configrender -c=my-config.xml -variables=siteA.properties -o=full_config.xml

So far, we have only seen the basics of storing data in variables. But what if siteA and siteB needed to commit documents to separate repositories? Below you’ll see how to leverage the power of Apache Velocity Engine to accomplish just that.

Importing Files

Using variables goes a long way toward organizing multiple configuration files. You can also dynamically include chunks of configuration by using Velocity’s #parse() directive.

To demonstrate, consider that siteA is required to commit documents to Azure Cognitive Search and siteB to Elasticsearch. The steps below will walk you through how to accomplish just that.

First, you need 2 committer XML files.

committer-azure.xml

<committer class="AzureSearchCommitter">

<endpoint>https://....search.windows.net</endpoint>

<apiKey>...</apiKey>

<indexName>my_index</indexName>

</committer>

committer-es.xml

<committer class="ElasticsearchCommitter">

<nodes>https://localhost:9200</nodes>

<indexName>my_index</indexName>

</committer>

Then, augment the Crawler config (my-config.xml) by adding the <committers> section.

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE xml>

<httpcollector id="collector-${domain}">

<workDir>./workDir</workDir>

<crawlers>

<crawler id="crawler-${domain}">

<startURLs stayOnDomain="true">

<url>${domain}</url>

</startURLs>

<maxDepth>${maxDepth}</maxDepth>

<!-- add this section -->

<committers>

#parse("${committer}")

</committers>

</crawler>

</crawlers>

</httpcollector>

Finally, the .properties files must be updated to specify the committer file required for each site.

siteA.properties

domain=www.siteA.com

maxDepth=-1

committer=committer-azure.xml

siteB.properties

domain=www.siteB.com

maxDepth=0

committer=committer-es.xml

Now you can use the configrender option to see the final configuration for each site.

siteA

>collector-http.bat configrender -c=my-config.xml -variables=siteA.properties -o=full_config.xml

Relevant snippet from full_config.xml.

<committers>

<committer class="com.norconex.committer.azuresearch.AzureSearchCommitter">

<endpoint>https://....search.windows.net</endpoint>

<apiKey>...</apiKey>

<indexName>my_index</indexName>

<!-- redacted for brevity -->

</committer>

</committers>

siteB

>collector-http.bat configrender -c=my-config.xml -variables=siteB.properties -o=full_config.xml

Relevant snippet from full_config.xml.

<committers>

<committer class="com.norconex.committer.elasticsearch.ElasticsearchCommitter">

<nodes>https://localhost:9200</nodes>

<indexName>my_index</indexName>

<!-- redacted for brevity -->

</committer>

</committers>

And there you have it! With those simple steps, you can add the correct <committer> to the final configuration for each site.
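
With the template now depending on three variables, a quick check before launching can catch a .properties file that forgot one of them. The sketch below is one possible way to do that and is not a Crawler feature: template_vars and missing_vars are hypothetical helper names, and it assumes variables are always written as ${name} and properties as simple name=value lines.

```shell
#!/bin/sh
# Sketch: verify that a .properties file defines every ${variable}
# referenced in a template. Helper names are hypothetical.

# List the distinct variable names referenced as ${name} in a template.
template_vars() {
    grep -o '\${[A-Za-z_][A-Za-z0-9_]*}' "$1" | tr -d '${}' | sort -u
}

# Print each variable the properties file does not define.
# Returns non-zero if at least one variable is missing.
# Assumption: properties are written as name=value with no leading spaces.
missing_vars() {
    template="$1"
    props="$2"
    status=0
    for name in $(template_vars "$template"); do
        if ! grep -q "^${name}=" "$props"; then
            echo "$name"
            status=1
        fi
    done
    return "$status"
}

# Optional command-line use: check.sh <template> <properties file>
if [ "$#" -eq 2 ]; then
    missing_vars "$1" "$2"
fi
```

For example, `missing_vars my-config.xml siteB.properties` prints nothing and returns success when domain, maxDepth, and committer are all defined in siteB.properties.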

Conclusion

As the scale and complexity of your projects grow, so does the challenge of managing multiple configuration files. This is where the Apache Velocity Template Engine helps. With variables and #parse(), you can organize your configurations to minimize redundancy. Say goodbye to duplicated effort, and welcome a more manageable and scalable approach to web crawling. Happy indexing!