This year I had the privilege of attending my first KubeCon + CloudNativeCon, the North America 2020 edition, which was held virtually. The event spanned four days of virtual activities such as visiting vendor booths, learning about cloud native projects, and exploring advances in cloud native computing.

The keynote opened by paying respects to the late, legendary Dan Kohn. Kohn’s influence changed how we use the internet, from online shopping to research, and paved the way for the evolution of The Linux Foundation and the Cloud Native Computing Foundation, supporting the creation of sustainable open source ecosystems for generations to come.

There were glitches during live streaming on the virtual conference platform. That was to be expected: the event amounted to a heavy, real-time load test, which is not something anyone wants to run against a production environment. Fortunately, on-demand recordings of the presentations are now available.

Attendees can join Slack channels at cloud-native.slack.com, such as #kubecon-mixandmingle and others on KubeCon-related topics, to communicate with one another. This is a great way to stay connected with the KubeCon audience virtually, even after the event is over.

KubeCon offers many 101-level sessions and tutorials on what the CNCF projects provide and how they can help with the three pillars I work with daily: automation, DevOps, and observability. These pillars are usually implemented in parallel. For instance, continuous development and continuous deployment require automation to build the pipeline, which involves writing code and knowing how to plan the operations architecture. Once the services are deployed, observability is needed to monitor them and keep delivery to users running smoothly. Many CNCF projects provide the building blocks for a development flow that takes committed code, deploys it to cloud services, and adds monitoring on top of a secured service mesh.

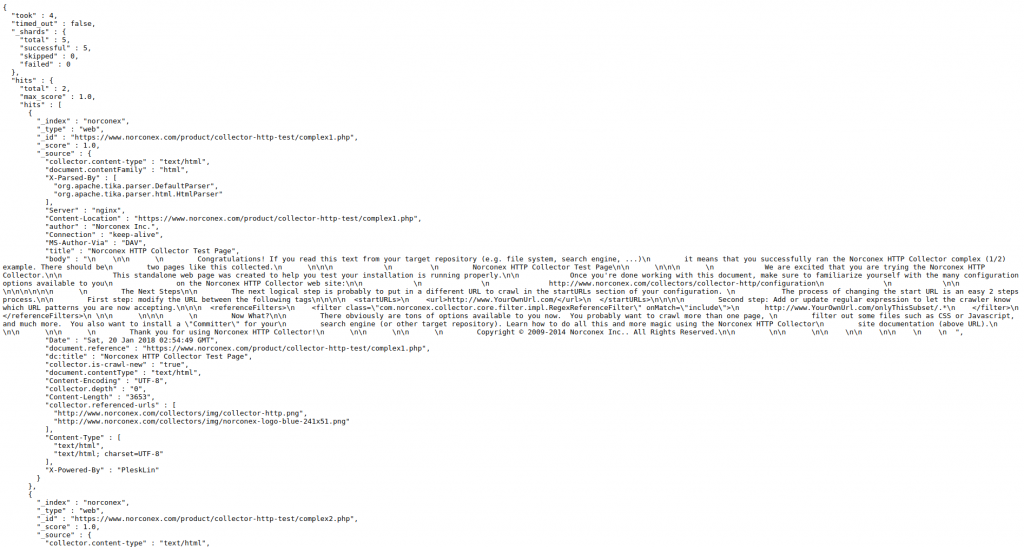

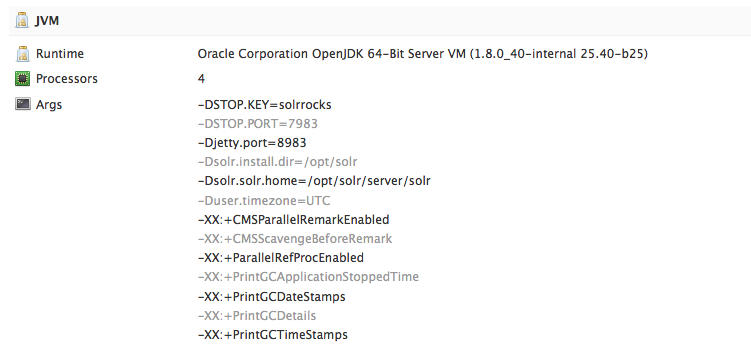

At Norconex, our upcoming Norconex Collector version 3.0.0 could be combined with containerd, Helm, and Kubernetes, with the build and deployment automated via Jenkins. A good starting point is to figure out how to package the Norconex Collector and Norconex Committer into a runnable container image, using a container tool such as Docker to run builds for development and testing. Once the image builds, the next decision is which container image registry to push it to, so that the Kubernetes cluster can pull the image from that registry and run it as a Kubernetes CronJob on whatever schedule the job requires. Each Kubernetes Job that the CronJob creates starts a Pod that runs the crawl with the Norconex Collector and commits the indexed data. Finally, I chose Jenkins as the build tool for this experiment to help automate updates and deployments.
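
To make the CronJob idea more concrete, here is a minimal Python sketch that assembles such a manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. It is not the manifest from the demo repository: the image name, schedule, claim name, and mount path are hypothetical placeholders, and `batch/v1` assumes a recent cluster (older clusters, including those current in 2020, served CronJob under `batch/v1beta1`).

```python
import json


def build_cronjob(name, image, schedule, claim_name, mount_path):
    """Return a Kubernetes CronJob manifest as a plain dict.

    All argument values are supplied by the caller; nothing here is
    taken from the actual Norconex demo configuration.
    """
    container = {
        "name": name,
        "image": image,
        # Mount the persistent volume so crawl state survives between runs.
        "volumeMounts": [{"name": "crawl-data", "mountPath": mount_path}],
    }
    pod_spec = {
        # Pods created by a Job must use "Never" or "OnFailure".
        "restartPolicy": "OnFailure",
        "containers": [container],
        "volumes": [
            {
                "name": "crawl-data",
                "persistentVolumeClaim": {"claimName": claim_name},
            }
        ],
    }
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": name},
        "spec": {
            "schedule": schedule,
            # Skip a scheduled run if the previous crawl is still going.
            "concurrencyPolicy": "Forbid",
            "jobTemplate": {"spec": {"template": {"spec": pod_spec}}},
        },
    }


if __name__ == "__main__":
    # Hypothetical values, chosen only for illustration.
    manifest = build_cronjob(
        name="norconex-crawl",
        image="docker.io/example/norconex-collector:3.0.0",
        schedule="0 2 * * *",  # every day at 02:00
        claim_name="norconex-crawl-pvc",
        mount_path="/data",
    )
    print(json.dumps(manifest, indent=2))
```

In a Helm chart, the same structure would normally live in a templated YAML file, with values such as the image and schedule pulled from `values.yaml` rather than hard-coded.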

The steps below give an overview of my quick demo setup:

- Demo use of the default Norconex Collector:

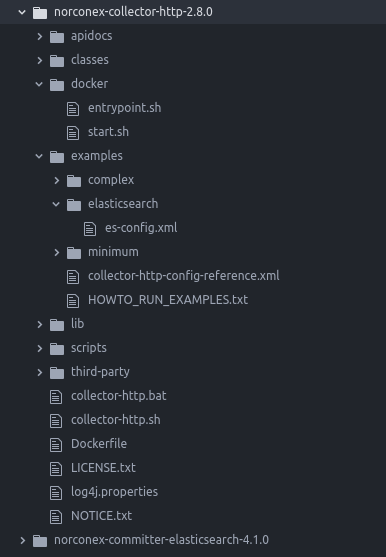

- Download the Norconex HTTP Collector with the Filesystem Committer. Other Committer choices can be found on the Norconex Committers page

- Build a container image using a Dockerfile

- Set up a Git repository file structure for the container image build

- Guide to building and test-running the image using the created Dockerfile

- The demo is set up locally, using Docker Desktop to run Kubernetes

- Tutorials for setting up local Kubernetes

- Determine where to push the container image; this can be a public or private image registry, such as Docker Hub

- The demo will use the public Docker Hub registry

- Create a Helm chart template using Helm v3

- The demo will start from the default chart template that Helm creates

- Get the Helm tool here: Helm | Installing Helm

- The demo will use the Kubernetes Node filesystem for persistent storage

- Other storage options can be used; on AWS, for instance, an EBS volume or EFS

- Helm template and YAML configuration

- cronjob.yaml deploys a Kubernetes CronJob that creates a new Kubernetes Job on a schedule

- pvc.yaml creates the Kubernetes persistent volume and persistent volume claim that the Norconex Collector crawl job will reuse on its next recrawl run

- Simple build using Jenkins

- Overview of Jenkins build job pipeline script
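
As a rough illustration of what the build job has to do, the Python sketch below lists the three commands such a pipeline typically runs (build the image, push it, roll out the Helm release) and prints them in dry-run mode. It is not the Jenkins pipeline script from the demo repository. The image name, release name, and chart path are hypothetical, and the `image.repository` and `image.tag` value keys are an assumption based on the default chart generated by `helm create`.

```python
import shlex
import subprocess


def pipeline_commands(image, tag, release, chart_dir):
    """Return the build, push, and deploy commands as argument lists."""
    ref = f"{image}:{tag}"
    return [
        # Build the container image from the Dockerfile in the current directory.
        ["docker", "build", "-t", ref, "."],
        # Push it to the registry the cluster pulls from.
        ["docker", "push", ref],
        # Install the release, or upgrade it if it already exists.
        [
            "helm", "upgrade", "--install", release, chart_dir,
            "--set", f"image.repository={image}",
            "--set", f"image.tag={tag}",
        ],
    ]


def run(commands, dry_run=True):
    """Print each command and, unless dry_run is set, execute it."""
    for cmd in commands:
        print("+", shlex.join(cmd))
        if not dry_run:
            # check=True stops the pipeline at the first failing step.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical values, chosen only for illustration.
    commands = pipeline_commands(
        image="docker.io/example/norconex-collector",
        tag="3.0.0",
        release="norconex-crawl",
        chart_dir="./chart",
    )
    run(commands, dry_run=True)
```

In Jenkins, each of these commands would usually become a shell step in its own pipeline stage, with registry credentials injected by Jenkins rather than stored in the script.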

I hope you enjoyed this recap of KubeCon!

More details on the code and tutorials can be found here:

https://github.com/somphouang/norconex-devops-demo